SUMMARY

Agentic AI is set to transform the customer service and support landscape.

Can it eliminate Level 1 support as we know it? Let’s discuss.

According to Gartner, Inc., “By 2029, agentic AI will autonomously resolve 80% of common customer service issues without human intervention, leading to a 30% reduction in operational costs.”

For decades, Level 1 (L1) support has been the frontline of customer service and IT helpdesks, handling basic troubleshooting, password resets, and frequently asked questions. While the traditional L1 support model has served businesses well, it is not without inefficiencies. Long wait times, high operational costs, and human errors often plague this tier of support.

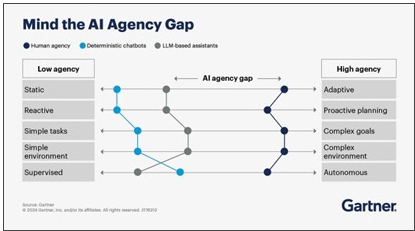

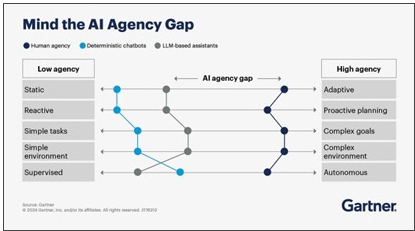

With the advent of AI-driven automation, chatbots have taken over many repetitive tasks previously handled by L1 agents. However, chatbots have their limitations: they struggle with complex queries and fail to adapt dynamically to unique customer issues. This is where Agentic AI enters the picture. Unlike rule-based or pre-programmed chatbots, Agentic AI possesses decision-making capabilities that allow it to function autonomously and improve over time, and its behavior is goal-oriented. Could Agentic AI finally eliminate the need for traditional L1 support altogether? Let’s explore.

Is the Current Process Efficient Enough?

To understand the potential of Agentic AI in replacing L1 support, it is essential to first assess the efficiency of the current system. Most companies rely on a tiered support structure, where L1 support acts as the first responder to customer inquiries. Their primary responsibilities include logging customer issues, resolving simple problems, and escalating complex cases to Level 2 (L2) or Level 3 (L3) support.

However, as customer expectations rise and businesses seek more cost-effective, scalable solutions, the efficiency of traditional L1 support is being called into question. While the system has served organizations well, its limitations are becoming more apparent, leading many to explore AI-driven alternatives.

L1 support agents frequently deal with the same issues—password resets, connectivity problems, and general FAQs. Over time, this monotony leads to fatigue and high turnover rates, increasing recruitment and training costs for companies.

Human-driven L1 support struggles to scale effectively, especially during peak periods when customer inquiries surge. Delays and long wait times frustrate customers, reducing satisfaction and damaging brand reputation.

L1 agents often lack the problem-solving depth required to handle certain customer issues, leading to unnecessary escalations to L2 and L3 support teams. Human agents rely on standard operating procedures and may struggle with unstructured queries. This clogs the system, creating inefficiencies in higher-tier support.

Maintaining a large workforce for L1 support requires significant investment in salaries, training, and infrastructure. Sometimes even basic troubleshooting can take significant time. With businesses increasingly looking to cut costs, the financial burden of human-led L1 support is difficult to justify.

Additionally, different agents may provide varied solutions, leading to inconsistent customer experiences.

Chatbots have been introduced to mitigate some of these issues, mostly for well-defined, repetitive tasks, but they are far from a perfect solution.

Limitations Addressed by Chatbots

AI-powered chatbots were introduced to automate simple tasks and reduce the burden on human agents. They can answer FAQs, reset passwords, and guide users through basic troubleshooting steps. Their main benefits are 24/7 availability, instantaneous responses, and consistent service: unlike human agents, chatbots provide round-the-clock support, process queries immediately to reduce wait times, and give standardized responses that keep support quality uniform.

However, chatbots have significant drawbacks, chief among them a limited understanding of complex issues. They cannot handle nuanced or multi-faceted problems effectively, and because most follow pre-defined scripts, they are ineffective when confronted with novel queries. Customers often become frustrated when a chatbot fails to understand their problem and loops through the same set of responses.
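To make the "pre-defined script" limitation concrete, here is a minimal sketch of a keyword-matching chatbot. The intents, keywords, and replies are hypothetical; the point is that any query outside the script falls through to the same canned fallback, which is exactly the loop customers find frustrating.

```python
# A minimal rule-based chatbot: hypothetical intents matched by keyword.
SCRIPT = {
    "password": "To reset your password, open Settings > Security > Reset password.",
    "wifi": "Please restart your router and wait 60 seconds before reconnecting.",
    "hours": "Our support desk is open 24/7.",
}
FALLBACK = "Sorry, I didn't understand. Please choose: password, wifi, or hours."


def scripted_reply(message: str) -> str:
    """Return the first scripted answer whose keyword appears in the message."""
    text = message.lower()
    for keyword, answer in SCRIPT.items():
        if keyword in text:
            return answer
    # Anything outside the script gets the same fallback, every time.
    return FALLBACK


if __name__ == "__main__":
    print(scripted_reply("I forgot my password"))
    print(scripted_reply("My invoice shows a duplicate charge after the plan upgrade"))
```

Note that even "My Wi-Fi is down" misses the `wifi` keyword: scripts fail on wording, not just on genuinely novel problems.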

Why do we need Agentic AI?

The limitations of chatbots indicate the need for a more intelligent and adaptable solution. Agentic AI steps in to bridge the gap by functioning beyond the rigid frameworks of traditional automation.

Unlike conventional chatbots, Agentic AI possesses decision-making capabilities, allowing it to analyze context, learn from past interactions, and autonomously handle diverse queries. Its advantages over standard chatbots include:

“I’ve always thought of AI as the most profound technology humanity is working on… more profound than fire or electricity or anything that we’ve done in the past.”

Sundar Pichai, CEO of Alphabet

Agentic AI: The Future of Smarter Support, Faster Resolution

Adopting Agentic AI for L1 support offers several compelling benefits that traditional chatbots and human agents struggle to match:

As discussed, Agentic AI presents a transformative approach to L1 support by bridging the gap between automation and human-like decision-making. By leveraging contextual understanding, autonomous decision-making, and continuous learning, Agentic AI has the potential to eliminate the need for traditional L1 support while enhancing efficiency, reducing costs, and improving customer satisfaction.
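The goal-oriented loop behind this approach (decide on an action, act, check whether the goal was met, and escalate when no confident step remains) can be sketched in a few lines. This is a simplified illustration, not a production agent: the tools are hypothetical, and a keyword-overlap scorer stands in for the LLM planner a real agentic system would use. Continuous learning is not modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Ticket:
    text: str
    history: list[str] = field(default_factory=list)
    resolved: bool = False


# Hypothetical tools; each acts on the ticket and reports whether the goal is met.
def reset_password(t: Ticket) -> bool:
    t.history.append("password reset link sent")
    return True


def restart_session(t: Ticket) -> bool:
    t.history.append("user session restarted")
    return "vpn" not in t.text.lower()  # pretend VPN faults need a specialist


TOOLS: dict[str, tuple[set[str], Callable[[Ticket], bool]]] = {
    "reset_password": ({"password", "locked", "login"}, reset_password),
    "restart_session": ({"slow", "frozen", "vpn", "session"}, restart_session),
}


def plan(t: Ticket, tried: set[str]) -> tuple[Optional[str], float]:
    """Stand-in for an LLM planner: score untried tools by keyword overlap."""
    words = set(t.text.lower().split())
    best, best_score = None, 0.0
    for name, (keywords, _) in TOOLS.items():
        if name in tried:
            continue
        score = len(words & keywords) / len(keywords)
        if score > best_score:
            best, best_score = name, score
    return best, best_score


def run_agent(t: Ticket, min_confidence: float = 0.3, max_steps: int = 3) -> str:
    """Decide, act, verify the outcome, and escalate when no confident step remains."""
    tried: set[str] = set()
    for _ in range(max_steps):
        tool, confidence = plan(t, tried)
        if tool is None or confidence < min_confidence:
            break
        tried.add(tool)
        if TOOLS[tool][1](t):
            t.resolved = True
            return f"resolved via {tool}"
    t.history.append("escalated to L2 with full context")
    return "escalated to L2"
```

Unlike the scripted bot, a failed action does not loop back to the same reply: the agent tries the next plausible step, then hands over to L2 with the history of what it already attempted.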

A significant gap still exists between today’s LLM-based assistants and full-fledged AI agents, but this gap will gradually narrow as we learn how to build, govern, and trust agentic AI solutions.

As businesses strive for better, faster, and more cost-effective customer support solutions, embracing Agentic AI appears to be the logical next step. While human agents will still play a role in advanced troubleshooting and emotional intelligence-driven interactions, L1 support as we know it may soon become a thing of the past.

Author

Shubhangi Singh, Sr. Business Analyst

Shubhangi Singh is a seasoned business analyst at Chainyard with over 10 years of experience in business analysis and digital transformation. She holds an MBA from IIM Lucknow and a BE in Electronics and Communication from VTU, Belgaum. She is also a Certified Scrum Product Owner (CSPO) and AWS Certified Cloud Practitioner.

Did you know that Gartner predicts 75% of businesses will use generative AI to create synthetic customer data by 2026, up from less than 5% in 2023? Organizations must embrace this shift or risk falling behind because of reluctance to change.

As GenAI adoption accelerates and industries adapt to new possibilities, testing processes are also poised for transformation. A key aspect of this evolution is synthetic data generation, which not only enhances test data preparation but also helps organizations navigate complex data laws and regulations.

Synthetic data plays a crucial role in testing and training machine learning models as well as in regular testing, particularly in scenarios where real data is scarce or highly sensitive, such as in finance and healthcare. In fact, Gartner predicts that by 2030, synthetic data will surpass real data in AI training, underscoring its growing importance.
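As a simple illustration of synthetic test data, the sketch below generates fake customer records from a seeded random generator, so the same seed always reproduces the same dataset in every test environment. It uses rule-based sampling from Python's standard library, not a generative AI model; the field names, value ranges, and name lists are assumptions, and the reserved `.test` domain ensures no real mailbox is addressed.

```python
import random
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str
    email: str
    age: int
    country: str


# Hypothetical value pools for the fake records.
FIRST = ["Asha", "Ben", "Chen", "Dana", "Eli", "Farah"]
LAST = ["Rao", "Smith", "Li", "Garcia", "Kim", "Okafor"]
COUNTRIES = ["IN", "US", "DE", "BR"]


def synthetic_customers(n: int, seed: int = 42) -> list[Customer]:
    """Generate n fake customers; identical seeds yield identical datasets."""
    rng = random.Random(seed)  # a local RNG keeps generation reproducible
    rows = []
    for i in range(n):
        first, last = rng.choice(FIRST), rng.choice(LAST)
        suffix = "".join(rng.choices(string.digits, k=4))
        rows.append(Customer(
            customer_id=f"C{i:06d}",
            name=f"{first} {last}",
            email=f"{first.lower()}.{last.lower()}{suffix}@example.test",
            age=rng.randint(18, 90),
            country=rng.choice(COUNTRIES),
        ))
    return rows
```

Because no record is derived from production data, the dataset can be shared freely across teams, while the seed gives every environment an identical copy.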

Challenges for Test Data Preparation

Test data preparation plays a crucial role in IT and data & analytics (D&A) operations, ensuring that software applications and security systems undergo rigorous testing before deployment. However, traditional methods present significant challenges.

Many organizations still rely on manual data entry, which, while customizable, is slow, error-prone, and lacks scalability. Others extract data from production environments, providing realistic datasets but posing security and compliance risks. Automated tools offer efficiency but often fail to generate dynamic and contextually accurate data.

A Gartner report highlights that 60% of organizations adopt synthetic data due to real-world data accessibility issues, 57% cite complexity, and 51% struggle with data availability. Quality and consistency remain a concern—poor test data can introduce bias, leading to unreliable test cases. The challenge grows as IT teams manage massive datasets across multiple environments.

Data privacy laws like GDPR and HIPAA further restrict access to real-world data, complicating compliance. Without centralized test data management, inefficiencies, redundancies, and security vulnerabilities emerge. Additionally, IT workflows involve structured and unstructured data—ranging from text to images—adding complexity. Traditional approaches also risk overlooking critical edge cases, leading to unexpected failures.

As IT and Analytics landscapes evolve, organizations must explore innovative solutions like Generative AI to streamline test data preparation while ensuring security and compliance.

Synthetic data: A compelling solution for all

Generative AI (Gen AI) offers an innovative approach to test data preparation by leveraging deep learning and automation. Here’s how it addresses the key challenges:

Improving Data Quality and Consistency: AI-driven test data generation ensures accuracy, completeness, and realism, eliminating inconsistencies across environments even for large volumes of data. Specific measures can also be introduced to reduce bias in the data. Together, these make test data management more feasible.

Enhancing Data Privacy and Regulatory Compliance: Our experience with Trust Your Supplier (TYS) and other platforms enabled us to create synthetic test data that mimics real-world data without exposing sensitive information, ensuring privacy compliance. For instance, it helped us adhere to GDPR for TYS and to HIPAA for a US-based healthcare organization. We use AI to automate data masking, anonymization, and compliance tracking to meet industry regulations.

Facilitating Centralized Test Data Management: AI-powered platforms centralize test data storage, improve access control, and reduce redundancy, which saves the effort of preparing data separately for each test environment. AI-based encryption and anomaly detection further enhance protection of stored test data.

Managing Complex Business Processes in Multiple Data Modes: AI-generated test data accurately captures real-world IT scenarios, enhancing test coverage. AI can generate structured and unstructured test data, ensuring comprehensive testing across diverse IT environments from multiple sources.

Uncovering Overlooked Insights: AI identifies hidden patterns and edge cases, improving test scenario coverage and reducing unexpected failures.
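The data masking mentioned under privacy and compliance can be sketched with keyed hashing: each sensitive value is replaced by an HMAC-derived token, so the same input always maps to the same token (keeping joins between tables intact) while the original cannot be recovered without the key. This is deterministic pseudonymization, which is weaker than full anonymization. The field names and key handling are assumptions; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = {"name", "email", "ssn"}  # hypothetical schema


def pseudonymize(value: str, key: bytes, prefix: str = "tok_") -> str:
    """Map a value to a stable, non-reversible token using HMAC-SHA256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return prefix + digest[:16]


def mask_record(record: dict[str, str], key: bytes) -> dict[str, str]:
    """Replace sensitive fields with tokens; leave other fields untouched."""
    return {
        field: pseudonymize(value, key) if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }
```

Using a key (rather than a plain hash) matters: without it, anyone could hash a list of known emails and match them against the tokens.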

Using synthetic data for our product Trust Your Supplier (TYS) and for our clients, we achieved greater efficiency and speed: AI automates test data generation, significantly reducing manual effort and accelerating the testing process. Because near-realistic generated data reduces dependency on production data, compliance risk, exposure risk, and operational costs all go down. Generation also scales without human intervention, adapting dynamically to testing requirements, and the resulting data covers diverse scenarios, improving software reliability and performance. Given these benefits, along with technological advancements and data regulations, it is evident that organizations will be compelled to adopt synthetic data sooner or later, with those handling sensitive data leading the way.

Current Limitations and Redressal While Using Generative AI

While Generative AI (Gen AI) offers transformative potential in test data preparation, its implementation comes with notable challenges that organizations must navigate carefully.

One key concern is biased performance—AI models trained on skewed datasets risk reinforcing inaccuracies. To ensure fairness, regular retraining with diverse and representative data sets is essential.

Additionally, ethical and legal constraints pose hurdles. AI-generated test data must align with regulatory frameworks such as GDPR and HIPAA, requiring transparent governance policies to maintain accountability and compliance.

Security risks also loom large. AI models handling sensitive test data can become targets for cyber threats. Implementing robust encryption, secure training methodologies, and stringent access controls is crucial to mitigating vulnerabilities.

Moreover, model performance issues can arise when AI struggles with domain-specific complexities. Fine-tuning models with industry-specific datasets and continuous monitoring can significantly enhance reliability and precision.

As organizations increasingly integrate Gen AI into IT workflows, a balanced approach—leveraging its strengths while proactively addressing these challenges—will be key to unlocking its full potential in test data preparation.

Future Scope of Generative AI in Test Data Preparation

The future of Generative AI (Gen AI) in test data preparation is poised for rapid advancements, promising to revolutionize workflows with enhanced security, efficiency, and compliance.

One key innovation is Explainable AI, which aims to increase transparency by providing insights into how test data is generated, ensuring greater trust in AI-driven processes. Federated Learning is another breakthrough, allowing AI models to learn from multiple sources without compromising data privacy, significantly bolstering security measures.
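Federated learning is commonly implemented with federated averaging (FedAvg): each participant trains on its own data and shares only model parameters, which a coordinator averages, weighted by each participant's sample count. The sketch below shows only that aggregation step, with plain lists standing in for model weights; local training, secure aggregation, and networking are out of scope.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client parameter vectors (the FedAvg aggregation step).

    Each update is (parameters, number_of_local_samples). Raw data never
    leaves the client; only these parameters are shared.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    if total <= 0 or any(len(p) != dim for p, _ in updates):
        raise ValueError("invalid sample counts or mismatched parameter sizes")
    return [
        sum(params[i] * n for params, n in updates) / total
        for i in range(dim)
    ]
```

For example, a client with 100 samples reporting `[1.0, 2.0]` and one with 300 samples reporting `[3.0, 4.0]` aggregate to `[2.5, 3.5]`: the larger dataset pulls the global model toward its parameters.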

AI agent-driven compliance auditing is set to automate regulatory tracking, reducing the burden of manual oversight in test data management. Meanwhile, self-adaptive test data generation will enable AI models to evolve dynamically based on real-time feedback, improving the accuracy and relevance of generated datasets.

Seamless integration with DevOps pipelines will further streamline test data provisioning within CI/CD workflows, minimizing deployment risks and accelerating software delivery cycles.

As organizations continue to embrace Gen AI, these innovations will drive greater efficiency, stronger security, improved ML model performance, and seamless regulatory compliance. With AI technology rapidly evolving, its role in IT and data & analytics (D&A) will only expand, transforming test data management for the better.

Author

Shubhangi Singh, Sr. Business Analyst

Shubhangi Singh is a seasoned business analyst at Chainyard with over 10 years of experience in business analysis and digital transformation. She holds an MBA from IIM Lucknow and a BE in Electronics and Communication from VTU, Belgaum. She is also a Certified Scrum Product Owner (CSPO) and AWS Certified Cloud Practitioner.

Originally published on LinkedIn and written by Chainyard CTO Mohan Venkataraman. Read the full article.

The article discusses the potential and challenges of using Generative Adversarial Networks (GANs) in enterprise applications. It highlights how GANs can revolutionize industries by generating realistic data, improving design processes, and enhancing decision-making through synthetic data generation.

The article also addresses the technical complexities and ethical considerations involved in deploying GANs at an enterprise level, emphasizing the need for robust frameworks and governance to manage these advanced AI technologies effectively.

It discusses how GANs, which are typically used in image generation and enhancement, can be applied to various business processes. These include data augmentation for training machine learning models, generating synthetic data for testing, enhancing cybersecurity by detecting anomalies, and improving quality control in manufacturing. The article emphasizes the transformative impact GANs can have on improving efficiency and innovation within enterprises.

Want to learn more about how Chainyard is transforming enterprises? Talk to an expert.

Originally published on LinkedIn and written by Chainyard CTO Mohan Venkataraman. Read the full article.

The article discusses the increasing interest in and adoption of generative AI, particularly following the release of ChatGPT in November 2022. It emphasizes the importance of responsible and ethical implementation of AI technologies, considering their potential social impact, security issues, and cost implications. Enterprises are looking to use generative AI for various purposes, such as gaining insights, generating reports, negotiating contracts, and making predictions.

The article proposes leveraging Distributed Ledger Technologies (DLTs), such as blockchain, to establish a reliable AI service within enterprises. It presents a conceptual view of the enterprise AI/ML stack, highlighting the role of DLTs in providing a trust layer. The actors involved in AI/ML solutions, including users, models, and prompts, are discussed along with their attributes and challenges.

Furthermore, the article outlines a DLT-enabled trust protocol for enterprise AI, focusing on user trust and model assurance, model registration, lifecycle management, and runtime authentication and recording. It emphasizes the importance of ensuring users’ trust in AI models and the reliability of generated insights.

In conclusion, the article underscores the significance of DLTs in governing responsible AI usage and facilitating model lifecycle management, with practical consulting and implementation services provided by companies like Chainyard. The author invites feedback and comments for further improvement.

Originally published at planetstoryline.com and written by Chainyard SVP Isaac Kunkel. Read the full article.

Digital transformation initiatives built on blockchain or decentralized applications (DApps) improve efficiency through greater transparency, stronger security, faster and cheaper transactions, and the use of smart contracts.

Developing a successful digital strategy for Distributed ledger technology (DLT) initiatives requires careful planning and execution. By identifying key challenges and opportunities, defining the initiative’s scope, developing a roadmap for implementation, addressing legal and regulatory considerations, identifying the right platform, such as Hyperledger Fabric, Ethereum, Besu, or Hedera, and building a solid team, businesses can unlock the full potential of blockchain technology and gain a competitive edge in today’s digital landscape.

Business process automation has become an essential part of digital transformation initiatives for enterprises. Almost all enterprise IT decision-makers cite process automation as a critical driver of innovation and necessary in achieving business outcomes. While automating business processes is gaining significant attention, are IT professionals investing enough in automating engineering functions related to Security and DevSecOps? Are they methodically addressing security automation to derive maximum benefits from it?

Commonly, organizations receive thousands or even millions of alerts each month that the security staff must monitor. They must guard a much larger attack surface now with the prevalence of several types of devices, apps, and cloud systems. Manually addressing these threats is almost impossible. Automation becomes increasingly necessary to defend the applications and infrastructure against threats that might slip through the cracks due to human error. In fact, according to a global security automation survey, 80% of surveyed organizations reported high or medium levels of automation in 2021.
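A minimal sketch of automated alert triage shows how automation keeps this volume manageable: duplicate alerts are collapsed into one case, cases are ranked, and each is routed to a response action. The alert fields, severity scale, and action names are hypothetical; a real orchestration platform would call the integrated security tools at the routing step.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str    # e.g. "edr", "fw" (hypothetical sources)
    rule: str
    host: str
    severity: int  # 1 (low) to 5 (critical)


# Hypothetical mapping from detection rule to automated response action.
ACTIONS = {"malware_detected": "isolate_host", "brute_force": "block_ip"}


def triage(alerts: list[Alert]) -> list[dict]:
    """Group duplicate alerts by (rule, host), rank the groups, and pick an action."""
    groups: dict[tuple[str, str], list[Alert]] = defaultdict(list)
    for a in alerts:
        groups[(a.rule, a.host)].append(a)
    cases = []
    for (rule, host), items in groups.items():
        cases.append({
            "rule": rule,
            "host": host,
            "count": len(items),
            "severity": max(a.severity for a in items),
            # Unknown rules go to a human analyst instead of an auto-response.
            "action": ACTIONS.get(rule, "assign_to_analyst"),
        })
    # Most severe first; among equals, the noisiest case first.
    return sorted(cases, key=lambda c: (c["severity"], c["count"]), reverse=True)
```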

This article guides security professionals through various potential areas of security automation and the critical role of DevSecOps in CI/CD pipelines.

Opportunities to automate security functions are limitless, as in any other business area. However, a methodical approach to identifying automation areas, and to prioritizing them by how frequently the activities occur, is critical to the success of automation initiatives. The following classification can act as a broad guideline for security automation:

Identifying evasive attacks across security layers by consolidating data from the security environments is the goal of extended detection and response (XDR) solutions, a critical method of security operations automation. XDR provides security analysts with data that helps them investigate and respond to various incidents, often through direct integration with standard security tools. Essential automation functions to ensure security across endpoints, networks, and cloud systems include:

A range of vulnerability assessments aims to protect against data breaches and ensure the availability of IT infrastructure. These assessments determine whether a system is exposed to known vulnerabilities, assign severity ratings to them, and, when necessary, offer remediation or mitigation. Automating vulnerability scanning addresses the twin problems of a growing pool of vulnerabilities and limited time to fix them. Automated scanners detect vulnerabilities across a variety of network assets, including servers, databases, applications, laptops, firewalls, printers, and containers, and can also check regulatory compliance.
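Severity ratings are commonly expressed on the CVSS v3.x qualitative scale: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The sketch below maps scanner findings to that scale and orders them for remediation; the `Finding` structure, asset names, and placeholder CVE IDs are hypothetical and do not reflect any particular scanner's output format.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    cve: str
    cvss: float  # CVSS v3.x base score, 0.0 to 10.0


def severity(score: float) -> str:
    """CVSS v3.x qualitative severity rating for a base score."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def remediation_queue(findings: list[Finding]) -> list[tuple[str, Finding]]:
    """Highest scores first, so Critical issues are fixed before Low ones."""
    ranked = sorted(findings, key=lambda f: f.cvss, reverse=True)
    return [(severity(f.cvss), f) for f in ranked]
```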

Automated management of responses by orchestrating several operations across security tools is the critical function of Security Orchestration, Automation, and Response (SOAR) methods. SOAR enables security teams to effectively triage alerts, respond quickly to cybersecurity events, and deploy an efficient incident response program. Three key areas of automation include:

As businesses move to public cloud environments that provide on-demand access to computing, networking, storage, databases, and apps, security automation becomes increasingly important. The possible security risks generated by manually setting security groups, networks, user access, firewalls, DNS names, and log shipping, among other things, are eliminated by automating infrastructure buildouts. Further, monitoring security configurations across numerous instances of resources across single, multiple, and hybrid cloud systems is another area where automation is quite helpful in a cloud context.
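Monitoring security configurations across many resources is a natural target for automation. The sketch below flags firewall or security-group ingress rules that expose sensitive ports to the entire internet. The `Rule` structure and the list of sensitive ports are assumptions, not any cloud provider's API; in practice the rules would be pulled from the provider's SDK or an inventory export and the check run on a schedule.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    resource: str
    port: int
    cidr: str  # source range allowed by this ingress rule


SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def is_public(cidr: str) -> bool:
    """True if the range covers the entire IPv4 or IPv6 address space."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def audit(rules: list[Rule]) -> list[str]:
    """Return one finding per sensitive port left open to the internet."""
    return [
        f"{r.resource}: {SENSITIVE_PORTS[r.port]} (port {r.port}) open to {r.cidr}"
        for r in rules
        if r.port in SENSITIVE_PORTS and is_public(r.cidr)
    ]
```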

Providing more ownership to development teams in deploying and monitoring their applications is the goal of DevOps. Automation of provisioning servers and deploying applications is critical for DevOps success. Software applications are complex and can potentially have many security issues ranging from harmful code to misconfigured infrastructure/environments. Integrating security processes and, more importantly, automating them as part of the DevOps workflows is the goal of DevSecOps.

Integration of security automation into the CI/CD pipeline processes without adversely affecting development speed and quality is essential. Automation should enable uninterrupted security compliance checks within the continuous development workflow. Besides, as described in the previous sections, various automation areas apply to the Continuous Operations workflows. Critical automation activities include:

Focus on DevSecOps is increasing, according to a survey conducted by GitLab in 2021. DevOps teams are running more security scans than ever before: over half run SAST scans, 44% run DAST, and around 50% scan containers and dependencies. And 70% of security team members say security has shifted left.
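Running scans only shifts security left if the pipeline acts on the results. A common pattern is a gate step that fails the build when findings exceed a policy threshold. The sketch below assumes a hypothetical JSON report containing a `findings` list whose entries carry a `severity` field; real SAST, DAST, and dependency scanners each use their own report formats, so the parsing step would need adapting.

```python
import json

# Hypothetical policy: maximum findings allowed per severity before the build fails.
POLICY = {"critical": 0, "high": 0, "medium": 10}


def gate(report_json: str, policy: dict[str, int] = POLICY) -> list[str]:
    """Return policy violations; an empty list means the build may proceed."""
    findings = json.loads(report_json).get("findings", [])
    counts: dict[str, int] = {}
    for finding in findings:
        sev = str(finding.get("severity", "unknown")).lower()
        counts[sev] = counts.get(sev, 0) + 1
    return [
        f"{sev}: {counts.get(sev, 0)} found, {limit} allowed"
        for sev, limit in policy.items()
        if counts.get(sev, 0) > limit
    ]


def exit_code(violations: list[str]) -> int:
    """A non-zero exit status makes the CI job, and therefore the pipeline, fail."""
    return 1 if violations else 0
```

In a pipeline step, a small script would read the scanner's report, print any violations, and call `sys.exit(exit_code(violations))`, so security checks run on every commit without a manual review step.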

As technology advances, the automation methods applicable to security processes also expand. Teams are adopting advanced automation techniques while also broadening the scope of automation. Besides classic, opportunistic script-driven automation, the following techniques are popular with enterprises in security automation:

It is worth noting that AI/ML is becoming a reality as the technology matures. It is not a surprise to learn that over 3 out of 4 IT executives in a cybersecurity survey conducted two years ago said that automation and AI maximized the efficiency of their security staff.

Our comprehensive services in Cyber Security and Cloud & DevOps help enterprises significantly enhance their security profile. The following customer success stories demonstrate how we applied advanced techniques for improving security and transforming DevOps:

With digital transformation becoming a strategic commitment for businesses, automation in different areas has gained an additional fillip as an enabler. It is now a core priority for all enterprises. Automation eliminates the hassle of repetitive tasks, reduces errors, and improves accuracy. In fact, close to three in four companies reported success when automation was their strategic priority (source).

Cloud automation is one instance of automation that involves using automated tools and processes to execute workflows in a cloud environment that would otherwise have to be performed manually by engineers. It enables businesses to take advantage of cloud resources efficiently while avoiding the pitfalls of manual, error-prone workflows.

The global cloud automation market size is expected to triple in the next five years, from 2022 to 2027 (source).

Cloud automation can be used in a range of workflows and tasks. Some of the key use cases are given below.

Cloud automation tools help configure virtual servers automatically by creating templates to define each virtual server’s configuration. Other cloud resources, such as network setups and storage buckets or volumes, can be configured automatically. These automation approaches help in agile provisioning of the required cloud infrastructure.
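Template-based configuration can be sketched as a base template deep-merged with per-server overrides, so every virtual server is described by data rather than configured by hand. The template fields and naming scheme below are hypothetical; infrastructure tools such as Terraform, CloudFormation, or Ansible provide this pattern in production.

```python
from copy import deepcopy

# Hypothetical base template shared by all web servers.
WEB_TEMPLATE = {
    "image": "ubuntu-22.04",
    "size": "small",
    "disk_gb": 30,
    "tags": {"tier": "web", "managed_by": "automation"},
}


def render(template: dict, overrides: dict) -> dict:
    """Deep-merge overrides into a copy of the template; the template is not modified."""
    result = deepcopy(template)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = render(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def fleet(template: dict, count: int, env: str) -> list[dict]:
    """Generate consistent configs for `count` servers in one environment."""
    return [
        render(template, {"name": f"{env}-web-{i:02d}", "tags": {"env": env}})
        for i in range(1, count + 1)
    ]
```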

Releasing a new application from development environments to production environments is a critical use case of cloud automation. It becomes even more critical in a CI/CD process where teams make new releases each week. Application deployments are time-consuming besides being error-prone when done manually.

Operating enterprise workloads is time-consuming, repetitive, and painstaking with tasks such as sizing, provisioning, and configuring resources like virtual machines. These tasks are the perfect automation targets that make life easy for IT engineers besides making businesses efficient.

Monitoring cloud infrastructure with the ability to provide immediate responses to incidents is critical to ensuring optimal application performance. Most public clouds offer built-in monitoring solutions that automatically collect metrics from the cloud environment. They allow users to configure alerts based on predefined thresholds.
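Threshold alerts are usually evaluated over several consecutive datapoints so that a single spike does not page anyone. A minimal sketch of that evaluation logic follows; the threshold and period count are assumptions, and managed cloud monitoring services implement this (with more options) for you.

```python
from collections import deque


class ThresholdAlarm:
    """Enter ALARM when a metric breaches the threshold for N consecutive datapoints."""

    def __init__(self, threshold: float, periods: int) -> None:
        self.threshold = threshold
        self.window: deque[bool] = deque(maxlen=periods)
        self.state = "OK"

    def observe(self, value: float) -> str:
        self.window.append(value > self.threshold)
        full = len(self.window) == self.window.maxlen
        if full and all(self.window):
            self.state = "ALARM"
        elif not self.window[-1]:
            self.state = "OK"  # recover as soon as a datapoint is healthy
        return self.state
```

For example, with `ThresholdAlarm(80.0, 3)` and CPU readings 85, 70, 90, 91, 95, 60, the alarm fires only at the third consecutive breach (95) and clears at 60.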

Administration of infrastructure, networks, applications, and users becomes complex in hybrid and multi-cloud environments. Further, integration between on-premises and public cloud systems becomes critical in some scenarios. Cloud automation programs synchronize assets between local data centers and cloud resources. They can automatically shift workloads to the cloud when local infrastructure faces resource limitations, and they enable disaster recovery with a remote DR site mirroring the on-premises environment.

Automation can further unite hybrid and multi-cloud management under a single set of processes and policies to improve consistency, scalability, and speed.

Cloud automation is essential for DevOps maturity, and the two typically go together well. DevOps emphasizes automation and relies on practices such as automated infrastructure-as-code, continuous delivery, and tight feedback loops, all dependent on automation.

DevOps is an evolution of agile practices and applies the innovations of the agile approach to operations processes. It can be considered a missing piece of Agile since a few of its principles are realized when DevOps practices are employed. Cloud automation furthers the benefits and promise of DevOps in an agile environment.

Continuous improvement with timely feedback, a DevOps essential, can be achieved more easily with embedded automation across the workflows.

Automating the cloud security process enables organizations to quickly gather timely information, securing their cloud environments while reducing vulnerability risks. Areas of automation could include:

Cloud security automation can manage risk promptly, deal effectively with complexity, and keep pace with changes in the IT environment.

Intelligent automation of cloud operations at scale is the role of AI/ML. Unlike basic script-based automation, machine learning algorithms can learn from operation patterns, make predictions, and mimic human-like remediation. The application of AI/ML can range from auto-detection of anomalies to auto-healing to cloud resource optimization.
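Anomaly detection is the entry point for these AI/ML uses. As a baseline sketch, the code below flags a metric value as anomalous when it lies more than `k` standard deviations from the mean of a trailing window (a rolling z-score). This is a simple statistical stand-in rather than a learned model, and the window size and `k` are assumptions to tune per metric.

```python
import statistics


def rolling_anomalies(values: list[float], window: int = 5, k: float = 3.0) -> list[int]:
    """Return indices whose value deviates more than k std devs from the trailing window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            if values[i] != mean:
                anomalies.append(i)  # any change from a perfectly flat baseline
            continue
        if abs(values[i] - mean) / stdev > k:
            anomalies.append(i)
    return anomalies
```

On CPU readings `[50, 52, 49, 51, 50, 51, 95, 50]`, only index 6 (the spike to 95) is flagged; an auto-healing workflow could subscribe to these indices instead of fixed thresholds.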

AI/ML-driven automation to manage cloud operations is still an evolving field but will play a more significant role in the coming years.

Integrating disparate automation across services and clouds is the primary goal of cloud orchestration. It creates a cohesive workflow that helps the business achieve its goals by connecting automated tasks. The infrastructure-as-code (IaC) approach has taken orchestration to higher levels of automation by bringing together a series of lower-level automations through configuration-driven techniques.
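At its core, orchestration means running lower-level automation tasks in an order that respects their dependencies. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to derive that order and to group tasks that can run in parallel. The task names and dependencies are hypothetical; IaC tools perform comparable dependency resolution internally.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks, each mapped to the tasks it depends on.
DEPENDENCIES = {
    "network": set(),
    "security_groups": {"network"},
    "database": {"network", "security_groups"},
    "app_servers": {"network", "security_groups"},
    "load_balancer": {"app_servers"},
    "dns_record": {"load_balancer"},
}


def execution_stages(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into stages; tasks within one stage can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        stages.append(ready)
        sorter.done(*ready)
    return stages
```

Here the database and application servers land in the same stage, since both depend only on the network and security groups.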

Cloud orchestration allows users to create an automation environment across the enterprise covering more teams, functions, cloud services, security, and compliance activities.

Several tools help in implementing cloud automation, broadly classified into two categories as follows:

Any large-scale cloud environment needs cloud automation, and it is one sure way to derive the utmost value out of the system. Management tasks that would otherwise consume tremendous time and resources get automated by cloud automation. This empowers organizations to update their cloud environments more quickly in response to business challenges.

The following customer success stories demonstrate how we applied automation techniques to transform cloud and DevOps:

Originally published at CEOWORLD MAGAZINE and written by Chainyard SVP Isaac Kunkel. Read the full article.

As spending on blockchain technology reached $6.6 billion in 2021, more companies are figuring out how to get involved. The exact blockchain opportunities are unique to each business’s existing ecosystems, stakeholders, and processes. The business model is critical: with a suitable business model, we see blockchain technology eliminating 90% of redundant tasks, speeding up cycle time by 90%, and reducing administrative costs by half for most of these companies.

The article discusses 5 Ways to Discover Blockchain Opportunities, starting with understanding blockchain and new business models, getting buy-in from all stakeholders, finding the right technology partner, quantifying the business value to all participants, and finally, having a roadmap for continuous improvements.

Want to learn more about how blockchain is disrupting enterprises? Talk to an expert.

In the article titled “Transforming the business of Real Estate Assets through Tokenization”, we summarized various types of real estate tokens, the challenges they solve, and areas to be aware of when venturing into them. This article focuses on the real estate tokenization process and implementation.

The real estate tokenization process starts with two steps – identifying the deal type and defining the legal structure. Subsequently, implementation involves selecting blockchain technology for storing tokens and choosing a platform where investors can securely purchase digital assets after verifying KYC and AML requirements.

The process begins with deciding on the asset type, shareholder type, jurisdiction, and applicable regulations, all of which have a crucial impact in this initial deal-structuring phase. Issuers generally choose to tokenize an existing deal to provide liquidity to current investors before raising money for a new project. Asset owners determine the specific property (or properties) to be digitized. The key considerations in deal structuring are the time period for ROI, cash-on-cash return, securing investments, and legal and business formation. These considerations are weighed together with property-specific parameters such as rental type, rent start date, neighborhood, year constructed, number of stories, bedrooms/bathrooms, and total units.
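Two of the deal-structuring metrics above are simple arithmetic. The sketch below assumes the common definition of cash-on-cash return (annual pre-tax cash flow divided by total cash invested) and uses a simple payback period as a rough proxy for the “time period for ROI.” The `DealAssumptions` class and all dollar figures are hypothetical, not taken from any real deal.

```python
from dataclasses import dataclass


@dataclass
class DealAssumptions:
    """Hypothetical inputs for one tokenized rental property."""
    total_cash_invested: float        # equity actually paid in (down payment, closing costs, ...)
    annual_pre_tax_cash_flow: float   # rent minus operating expenses and debt service


def cash_on_cash_return(deal: DealAssumptions) -> float:
    """Annual pre-tax cash flow divided by total cash invested."""
    if deal.total_cash_invested <= 0:
        raise ValueError("total_cash_invested must be positive")
    return deal.annual_pre_tax_cash_flow / deal.total_cash_invested


def simple_payback_years(deal: DealAssumptions) -> float:
    """Years of constant cash flow needed to recover the cash invested.

    Ignores appreciation, refinancing, and the time value of money.
    """
    if deal.annual_pre_tax_cash_flow <= 0:
        raise ValueError("annual_pre_tax_cash_flow must be positive")
    return deal.total_cash_invested / deal.annual_pre_tax_cash_flow


deal = DealAssumptions(total_cash_invested=500_000, annual_pre_tax_cash_flow=40_000)
print(f"Cash-on-cash return: {cash_on_cash_return(deal):.1%}")  # Cash-on-cash return: 8.0%
print(f"Simple payback: {simple_payback_years(deal):.1f} years")  # Simple payback: 12.5 years
```

A real deal model would add appreciation, financing terms, and discounting; this only shows how the two headline numbers relate.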

During the digitization stage, ownership information recorded on paper is transferred to the blockchain. Security tokens are stored in a distributed ledger, and the actions that can be performed on them are encoded in smart contracts. Digitizing real estate also requires creating a legal wrapper around the individual property to securitize it and form an investment vehicle.
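To illustrate why a ledger makes digitized ownership records tamper-evident, here is a minimal sketch of an append-only, hash-linked record log. It is a single-process stand-in for a distributed ledger, with no consensus, networking, or legal wrapper; `OwnershipLedger`, `OwnershipRecord`, and the parcel and owner names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class OwnershipRecord:
    property_id: str
    owner: str
    prev_hash: str

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        payload = json.dumps(
            {"property_id": self.property_id, "owner": self.owner, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class OwnershipLedger:
    records: list = field(default_factory=list)

    def append(self, property_id: str, owner: str) -> OwnershipRecord:
        # Each new record stores the hash of the record before it.
        prev = self.records[-1].digest() if self.records else GENESIS_HASH
        record = OwnershipRecord(property_id, owner, prev)
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Return True if every record still points at the hash of its predecessor."""
        expected_prev = GENESIS_HASH
        for record in self.records:
            if record.prev_hash != expected_prev:
                return False
            expected_prev = record.digest()
        return True


ledger = OwnershipLedger()
ledger.append("parcel-17", "Alice Holdings LLC")  # paper deed digitized
ledger.append("parcel-17", "Bob Realty Trust")    # later transfer
print(ledger.verify())  # True

ledger.records[0].owner = "Mallory"  # tamper with history
print(ledger.verify())  # False
```

Editing an earlier record breaks the link to the record after it, which `verify()` detects. This toy version cannot detect a change to the latest record on its own; a real blockchain relies on replicated copies and consensus to cover that case.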

The most common structures are:

Real Estate Fund: A private equity firm invests in a portfolio of properties, and the token represents fund units. Only accredited investors or approved institutional purchasers may receive the dividends generated by the tokenized investment.

Real Estate Investment Trust (REIT): Shares in a REIT can be issued as digital tokens, and token holders have the same rights to the REIT’s operating revenue that traditional investors have today.

Project Finance: Tokenization is a highly effective way to raise funding for projects. During the token offering, tokens are made available to consumers and accredited investors. Here, the token may represent ownership or a future right to use the asset.

Single-Asset Special Purpose Vehicle (SPV): Under this arrangement, tokens represent shares of the SPV, which is usually a series LLC. To comply with KYC/AML rules for LLCs, each token investor must be registered as a member of the LLC before investing.

After defining the legal structure, the next step is to choose the right technology. The process involves making four choices:

Selecting Blockchain / Token: This involves choosing the blockchain on which the token will be recorded, deciding on the token standard, modeling the token data, and defining smart contracts that set rules for transfers, limits, commissions, and so on.

Primary / Secondary Marketplace: Choosing how tokens will be minted and issued during the initial offering, and defining where and how investors will trade them afterward.

Custody: Establishing a secure custody system for real estate, including proper maintenance and reporting of real-world updates.

KYC/AML: Periodically verifying investors to maintain regulatory compliance.
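The transfer rules, limits, and commissions mentioned under Selecting Blockchain / Token, together with the KYC/AML requirement, can be sketched as an in-memory model of what a security token’s smart contract might check before every transfer. This is not a smart contract or any particular token standard; `SecurityToken`, `TransferRejected`, the basis-point commission, and the per-investor holding cap are illustrative assumptions. The same verified set could stand in for the registered LLC members of an SPV.

```python
from dataclasses import dataclass, field


class TransferRejected(Exception):
    """Raised when a transfer would violate one of the token's rules."""


@dataclass
class SecurityToken:
    # Hypothetical rule parameters a smart contract might enforce.
    max_holding: int          # cap on units any single investor may hold
    commission_bps: int       # commission per transfer, in basis points (1 bp = 0.01%)
    treasury: str = "issuer-treasury"
    balances: dict = field(default_factory=dict)   # holder -> units
    verified: set = field(default_factory=set)     # investors who passed KYC/AML

    def verify_investor(self, investor: str) -> None:
        self.verified.add(investor)

    def revoke_investor(self, investor: str) -> None:
        # A periodic re-check can remove an investor who no longer passes KYC/AML.
        self.verified.discard(investor)

    def transfer(self, sender: str, receiver: str, amount: int) -> int:
        """Move `amount` units from sender to receiver; return units received after commission."""
        if amount <= 0:
            raise TransferRejected("amount must be positive")
        for party in (sender, receiver):
            if party not in self.verified:
                raise TransferRejected(f"{party} is not KYC/AML verified")
        if self.balances.get(sender, 0) < amount:
            raise TransferRejected("insufficient balance")
        fee = amount * self.commission_bps // 10_000   # integer units, rounded down
        net = amount - fee
        if self.balances.get(receiver, 0) + net > self.max_holding:
            raise TransferRejected("receiver would exceed the holding limit")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + net
        self.balances[self.treasury] = self.balances.get(self.treasury, 0) + fee
        return net


token = SecurityToken(max_holding=1_000, commission_bps=50)   # 0.5% commission
for investor in ("alice", "bob"):
    token.verify_investor(investor)
token.balances["alice"] = 600   # seeded as if issued in the primary offering

print(token.transfer("alice", "bob", 200))  # 199 (1 unit goes to the treasury)
try:
    token.transfer("bob", "carol", 50)
except TransferRejected as err:
    print(err)  # carol is not KYC/AML verified
```

All checks run before any balance changes, so a rejected transfer leaves the ledger untouched, mirroring how a reverted on-chain transaction has no effect.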

The next stage focuses on token generation and distribution, with several payment options offered for purchasing tokens. Investors may need a digital wallet, available on web and mobile platforms, to store the tokens, which they receive during a live sale.

Primary Distribution: Through this procedure, tokens are awarded to investors in return for investment capital; this is also when the tokens are first minted.

Post-Tokenization Management: This step includes processes for managing corporate activity, such as shareholder voting and dividend distribution. Smart contracts encoded in the token can automate these procedures. Token management continues after issuance until the tokens reach maturity or are redeemed.

Secondary Trading: Tokenization increases liquidity in secondary trading. A token holder can swap tokens with another investor on a marketplace, through an exchange, or over the counter.
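Primary distribution and dividend payouts both reduce to bookkeeping over token balances. The sketch below assumes tokens are sold at a fixed price, all amounts are held in integer cents to avoid floating-point rounding, and dividends are split pro rata to holdings with any leftover cents left undistributed. `mint_primary`, `distribute_dividend`, and the figures are hypothetical.

```python
def mint_primary(capital_by_investor: dict[str, int], price_per_token: int) -> dict[str, int]:
    """Mint whole tokens for each investor's capital (all amounts in cents).

    Capital that doesn't cover a whole token is simply not converted here.
    """
    if price_per_token <= 0:
        raise ValueError("price_per_token must be positive")
    return {investor: cents // price_per_token for investor, cents in capital_by_investor.items()}


def distribute_dividend(holdings: dict[str, int], dividend_cents: int) -> tuple[dict[str, int], int]:
    """Split a dividend pro rata to token holdings, in whole cents.

    Returns (payouts, undistributed_cents). Each payout is rounded down,
    so the total paid never exceeds the dividend.
    """
    total_tokens = sum(holdings.values())
    if total_tokens == 0:
        raise ValueError("no tokens outstanding")
    payouts = {holder: dividend_cents * units // total_tokens for holder, units in holdings.items()}
    return payouts, dividend_cents - sum(payouts.values())


capital = {"alice": 500_000, "bob": 250_000, "carol": 100_000}   # $5,000 / $2,500 / $1,000
holdings = mint_primary(capital, price_per_token=10_000)         # $100 per token
print(holdings)  # {'alice': 50, 'bob': 25, 'carol': 10}

payouts, undistributed = distribute_dividend(holdings, dividend_cents=100_000)  # $1,000 dividend
print(payouts, undistributed)  # {'alice': 58823, 'bob': 29411, 'carol': 11764} 2
```

Rounding each payout down guarantees the issuer never pays out more than the dividend; how the leftover cents are handled (carried forward, paid to the largest holder, and so on) is a policy decision this sketch leaves open.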

Real estate tokenization has several advantages that make it a far more efficient investment option than any previously available ones.

We can expect real estate tokenization to open up the market for real estate assets, enabling efficient movement of funds and effective management of equity while providing greater reliability, transparency, and security. With blockchain technology as its underlying foundation and platform, real estate tokenization is likely to be the future of real estate assets, making them more robust, flexible, and secure than traditional models.

Given these advantages, it is no surprise that the real estate tokenization (RET) market is estimated to become a $1.4 trillion market by 2026 (source). This potential can be realized only if the technology is optimized to overcome existing hurdles. In addition, expert tokenization solution providers have an important role to play in expanding the market and in helping real estate players navigate the initial stages of tokenization while minimizing the associated risks and costs.

Chainyard has vast experience in architecting, building, testing, securing, and operating blockchain-based solutions for some of the largest companies in the world across multiple domains: Supply Chain, Manufacturing, Transportation, Logistics, Banking and Trade Finance, Insurance, Healthcare, Retail, Government, and Procurement. Read these customer success stories to see how Chainyard has helped clients build large, enterprise-grade blockchain-based solutions. Chainyard has been instrumental in building Trust Your Supplier, a procurement innovation platform that enables suppliers to create and maintain a trusted digital identity and selectively share information with a vast network of buyers and partners.

The Value of NFTs and Their Impact on Blockchain

Non-fungible tokens, or NFTs, haven’t changed the blockchain industry at its core. However, they’ve had quite a positive impact on bringing more visibility to blockchain and its many uses.

Many people not involved in the blockchain industry have been introduced to NFT crypto-assets in the form of digital art. Although collectible art is certainly one use for these assets and their unique attributes, it’s just one representation of the vast array of ways that NFT and blockchain solutions could change modern life.

For example, NFT tickets would help cut down on counterfeit sales for sporting events, concerts, conventions, and more. Because NFTs can’t be duplicated, the originals would be simple to verify. Plus, each NFT ticket could be programmed via a smart contract to entitle the legitimate ticket holder to special perks like free merchandise or exclusive gatherings.
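A rough model of the ticketing idea: each ticket ID can be minted only once, only its current holder can transfer or redeem it, and a perk list travels with the ticket. In this sketch, `TicketRegistry` is a hypothetical in-memory stand-in for an NFT ticket contract, and holders are identified by plain names; a real system would have the holder prove ownership by signing with their wallet key.

```python
from dataclasses import dataclass, field


@dataclass
class TicketRegistry:
    """Toy stand-in for an NFT ticket contract: each ticket ID exists once and has one holder."""
    owners: dict = field(default_factory=dict)      # ticket_id -> current holder
    perks: dict = field(default_factory=dict)       # ticket_id -> perks attached to the ticket
    checked_in: set = field(default_factory=set)    # tickets already used at the gate

    def mint(self, ticket_id: str, holder: str, perks=()) -> None:
        if ticket_id in self.owners:
            raise ValueError(f"ticket {ticket_id} already exists")  # no duplicates
        self.owners[ticket_id] = holder
        self.perks[ticket_id] = list(perks)

    def transfer(self, ticket_id: str, sender: str, receiver: str) -> None:
        if self.owners.get(ticket_id) != sender:
            raise PermissionError("only the current holder can transfer this ticket")
        self.owners[ticket_id] = receiver

    def admit(self, ticket_id: str, holder: str) -> list:
        """Admit the holder once and return the perks the ticket entitles them to."""
        if self.owners.get(ticket_id) != holder:
            raise PermissionError("presenter does not hold this ticket")
        if ticket_id in self.checked_in:
            raise PermissionError("ticket already used")
        self.checked_in.add(ticket_id)
        return self.perks[ticket_id]


registry = TicketRegistry()
registry.mint("SEAT-A12", "alice", perks=["meet-and-greet pass"])
registry.transfer("SEAT-A12", "alice", "bob")   # resale to bob
print(registry.admit("SEAT-A12", "bob"))          # ['meet-and-greet pass']
```

A counterfeit copy fails at the gate because the registry, not the paper or screenshot, decides who holds the ticket.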

NFTs are also heating up the video game industry, which shouldn’t be a surprise. Many players would like to own the digital assets they purchase or earn while moving through a game. If in-game items were issued as NFTs, players could retain ownership of those assets beyond the confines of the game, which would let them market or sell the assets later, potentially for a profit.

Real estate is yet another growth space for the NFT industry. Recording land contracts as NFTs can reduce fraud involving fabricated documents. Perhaps one day, NFTs for land records will lessen the need for costly legal intervention in cases of proving who owns which parcel.

The Multifaceted Value Proposition of Blockchain and NFTs

Of course, many people still aren’t sure what makes NFTs particularly valuable. Even those who understand NFTs generally have a little trouble seeing their real-world applications.

So what is the value of an NFT? Below are some of the major reasons NFT and blockchain technology stir up so much discussion surrounding profitability and inherent value.

1. NFTs offer provenance and ownership.

Because a token (a.k.a. the NFT) on a blockchain can’t be forged or exactly duplicated, it becomes its own authority. Someone who owns an original NFT doesn’t need to go through a central authority to prove the NFT’s authenticity. Removing the need for a middleman to legitimize an NFT makes ownership and trading less cumbersome, and the value saved accrues to the NFT itself.

2. The NFT industry is fostering buyer-seller efficiencies.

More and more, NFT holders can tap into global marketplaces when they want to sell their NFTs. Because NFTs have more liquidity than traditional assets, their holders can recoup investments more readily. Additionally, NFTs support fractional ownership, giving smaller investors access to larger investments by enabling them to own a “slice” rather than an entire asset.

3. Smart contracts can follow NFTs for life.

NFTs can be programmed with smart contracts. Consider a digital artist who programs an NFT with a smart contract that may give the artist a percentage of any sale of the NFT. Every time the NFT changes hands, the smart contract’s terms would then be enforced automatically.

4. Blockchain and NFTs offer decentralization with security.

By its very nature, blockchain requires none of the centralized entities controlling the markets and marketplaces the world currently relies on. Rather, blockchain and NFTs foster decentralization and transparency. At the same time, they offer incredible levels of security that would have seemed unfathomable before the advent of blockchain ledgers.

5. NFTs build trust that’s often missing in transactional relationships.

Blockchain ensures a single version of the truth. Period. As a result, systems and assets like NFTs that are built on blockchain are trustworthy. Any transfer of blockchain-based items can be handled with a high degree of trust. And again, there’s no need for a centralized authority to step in to fuel the trust factor. It just exists.
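The royalty arrangement from point 3 can be sketched as follows, assuming a royalty expressed in basis points and sale prices in integer cents. `RoyaltyNFT` is a hypothetical model of the logic a smart contract would run on each sale, not an actual on-chain contract or royalty standard.

```python
from dataclasses import dataclass, field


@dataclass
class RoyaltyNFT:
    """Toy model of an NFT whose sale logic pays the original artist a royalty."""
    token_id: str
    artist: str
    owner: str
    royalty_bps: int                               # e.g. 1_000 bps = 10%
    ledger: dict = field(default_factory=dict)     # account -> proceeds received, in cents

    def sell(self, buyer: str, price_cents: int) -> tuple[int, int]:
        """Transfer the NFT to `buyer`, paying the royalty to the artist and the rest to the seller."""
        if price_cents <= 0:
            raise ValueError("price must be positive")
        royalty = price_cents * self.royalty_bps // 10_000
        seller_share = price_cents - royalty
        self.ledger[self.artist] = self.ledger.get(self.artist, 0) + royalty
        self.ledger[self.owner] = self.ledger.get(self.owner, 0) + seller_share
        self.owner = buyer
        return royalty, seller_share


nft = RoyaltyNFT(token_id="art-1", artist="dana", owner="alice", royalty_bps=1_000)
print(nft.sell("bob", 200_000))     # (20000, 180000)
print(nft.sell("carol", 300_000))   # (30000, 270000)
print(nft.ledger)                    # {'dana': 50000, 'alice': 180000, 'bob': 270000}
```

Because the split happens inside the same operation that changes ownership, the artist is paid on every resale without a separate collection step.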

To be sure, NFTs (and blockchain, for that matter) are going through what can only be called the “hype” cycle. Very high expectations are being set for what blockchain and NFT technology can and will do. No doubt, some people will be disillusioned along the way to blockchain’s maturity. Nevertheless, blockchain and NFTs are positioned to have a significant and transformative effect on many industries beyond those that have tested the waters so far.